📢 The leaderboard is constantly updating as we are welcoming new submissions! Please email us!

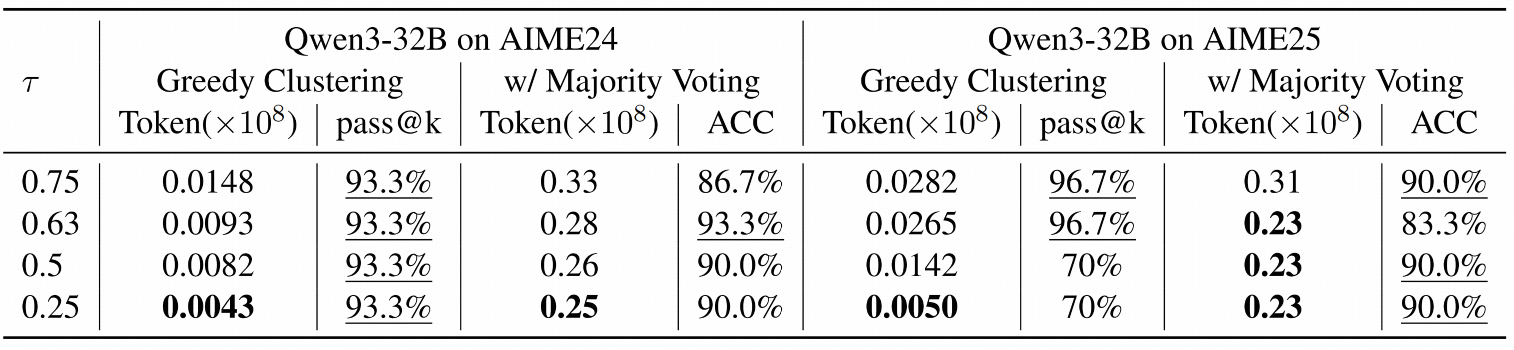

We consider the token consumption and accuracy of three widely-used reasoning models, DeepSeek-8B, Qwen3-32B, and GPT-OSS-20B on three benchmarks:

AIME24: 30 problems AIME25: 30 problems GPQA-Diamond: 198 problems

To view other sorted results, please click on the corresponding cell.

| # | Method | Date | DeepSeek-8B | Qwen3-32B | GPT-OSS-20B | |||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| AIME24 | AIME25 | GPQA | AIME24 | AIME25 | GPQA | AIME24 | AIME25 | GPQA | ||||||||||||

| Token | Acc | Token | Acc | Token | Acc | Token | Acc | Token | Acc | Token | Acc | Token | Acc | Token | Acc | Token | Acc | |||

| 1 | cons@512📝 Meta |

2025-08-21 |

3.55 | 86.7% | 4.01 | 82.3% | 9.92 | 72.5% | 2.00 | 84.8% | 2.43 | 80.1% | 7.44 | 72.2% | 5.57 | 96.7% | 6.26 | 95.4% | - | - |

| 2 | DeepConf-high📝 Meta |

2025-08-21 |

1.45 | 86.7% | 2.37 | 81.4% | 6.90 | 72.4% | 0.88 | 86.4% | 1.61 | 80.2% | 4.16 | 72.9% | 3.07 | 96.7% | 3.18 | 95.3% | - | - |

| 3 | DeepConf-low📝 Meta |

2025-08-21 |

0.78 | 92.5% | 1.24 | 86.4% | 3.46 | 71.7% | 0.66 | 89.5% | 1.14 | 80.2% | 3.21 | 73.0% | 1.11 | 95.7% | 1.21 | 96.1% | - | - |

| 4 | cons@512 Tsinghua | 2025-10-10 |

3.62 | 86.7% | 4.19 | 83.3% | 10.9 | 66.2% | 1.93 | 86.7% | 2.64 | 80.0% | 6.94 | 70.7% | 2.05 | 93.3% | 2.10 | 90.0% | 4.60 | 70.7% |

| 5 | DeepPrune Tsinghua |

2025-10-10 |

0.42 | 86.7% | 0.35 | 83.3% | 2.54 | 63.1% | 0.26 | 90.0% | 0.23 | 90.0% | 1.00 | 70.2% | 0.42 | 90.0% | 0.38 | 93.3% | 2.20 | 68.7% |

📝 indicates the result is taken from the method's corresponding paper

1*. The comparison is for end-to-end reasoning system that combines the reasoning model with parallel scaling methods like DeepConf or self-consistency (e.g. cons@512). 2*. The token consumption is counted in 10^8. 3*. The cons@512 method means sampling 512 parallel traces with majority voting for final result. 4*. The system with least token consumption is in bold, and the one with highest accuracy is underlined. 5*. If you want to develop new methods, you can refer to our established reasoning trace dataset of the three reasoning models. Based on these existing traces, you can build your own answer aggregation strategies or early stopping methods without running the base reasoning model again.

Last Update: 2025-10-10